The Uncanny Valley of Operational Excellence: A 4-part Insurance Retrospective.

Part 2: The 95% Failure Rate and What It Tells Us About AI Implementation

A SparrowHawk Year-End Retrospective

Look, AI companies are building incredible technology. I need to say that up front because what follows might sound cynical, and it’s not.

I’ve seen demonstrations this year that genuinely impressed me. Document processing that parses handwritten notes in different languages. Natural language processing that understands insurance policy language. Predictive models that spot patterns humans would miss.

The technology is real. The potential is real. So why is everyone failing at it?

The MIT Reality Check

MIT found that 95% of generative AI pilots at companies are failing.

Ninety-five percent. Not “underperforming expectations.” Not “needs refinement.” Failing.

These are sophisticated companies with smart people and real budgets. My first thought was: That can’t be right. But the more conferences I attended, the more conversations I had with ops leaders, the more sense it made.

The problem isn’t the technology. The problem is how we’re implementing it.

The Personal AI Paradox

Most of us are using AI daily. ChatGPT, Claude, Gemini. Many of us pay for premium subscriptions because we’ve experienced the 5-10X productivity boost firsthand. I’ve even noticed that “gpt’ing it,” much like “googling,” is slowly becoming synonymous with internet research. AI is embedded in our lives, and it’s not going anywhere soon.

So, naturally, when business executives and personal AI users saw this technology’s potential, the conclusion seemed obvious: “We need to deploy this company-wide.”

Unfortunately, the MIT study shows it’s not that simple. Personal AI success and enterprise AI success are entirely different problems. You can’t just scale “I use ChatGPT to write better emails” into “our entire underwriting operation now runs on AI.”

The gap between personal productivity and enterprise transformation is where $30-40 billion in AI investment is currently disappearing.

The Operational Opioid Crisis

I kept seeing the same pattern.

A company has operational pain. Submission backlogs, slow processing times, compliance gaps. They’re under pressure. Growth is outpacing capacity.

A vendor shows up with an AI solution. The demo looks great. “Implement in 90 days. See results immediately.”

Six months later? They’re either quietly shelving the project, manually fixing the AI’s mistakes, or claiming success while their team does workarounds in the background.

This is what I’m calling the operational opioid crisis. Companies are treating AI like a script farm: the doctor who sees 40 patients a day and sends every single one home with a prescription. Five-minute consultation, powerful medication, no real diagnosis of the underlying problem.

How can you see 40 patients a day and actually know the root cause? You can’t. You’re just prescribing solutions and hoping something works.

That’s what’s happening with AI implementation. Companies aren’t doing the hard work of understanding their own operations: which processes actually matter, what good outcomes look like, and where the real bottlenecks are. They’re expecting the AI vendor to diagnose problems the company hasn’t articulated.

AI vendors can help surface patterns and opportunities. But they can’t tell you whether your endorsement workflow should take 4 days or whether that’s a problem worth solving. That’s operational strategy work the company has to own.

Just like prescription medications, AI works incredibly well when it’s the right solution for a properly diagnosed problem. But when you’re throwing it at symptoms without understanding the disease? You get a 95% failure rate.

What Actually Needs to Happen First

The MIT study doesn’t just say “AI fails.” It explains why.

No Clear Problem Definition

Most companies can’t articulate what specific problem they’re solving. They know they have “operational inefficiency” but haven’t diagnosed where it comes from or why it happens.

AI needs specificity: “Endorsement processing takes 4 days because we’re manually checking 12 compliance requirements across 8 states, and 30% of the time we miss one.” That’s solvable. “We need to be faster” is not.

Data Isn’t Ready

Here’s what most companies get wrong: they assume AI needs perfectly clean, structured data before it can work. That’s old-school automation thinking.

Modern AI is built to handle messy data. It can parse inconsistent formats, work with natural language notes, and find patterns across siloed systems. The technology isn’t the bottleneck.

The problem is most companies don’t know:

- Which data actually matters for which decisions
- What “right” looks like to validate AI outputs against
- Whether their data is even accessible across systems

What insurance operations typically have: policy data in the management system, submission notes in email, underwriting decisions documented inconsistently (or not at all), compliance documentation in shared drives with creative naming conventions, critical context buried in “comments” fields.

AI can work with that chaos. But if you can’t tell the AI what patterns matter, and you have no baseline to validate whether its outputs are correct, you’re not automating operations - you’re automating guesswork.
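
As a rough illustration of what “a baseline to validate against” could mean in practice, here is a minimal Python sketch. Everything in it is hypothetical: the submission IDs, the decision labels, and the `validation_report` helper are invented for the example and do not describe any particular vendor’s tooling. It simply compares AI outputs with human-reviewed answers for the same records and counts how many outputs have no reviewed answer at all.

```python
def validation_report(ai_outputs, reviewed_baseline):
    """Compare AI outputs with human-reviewed answers for the same record IDs."""
    shared = sorted(set(ai_outputs) & set(reviewed_baseline))
    if not shared:
        raise ValueError("No overlapping records: there is nothing to validate against.")
    mismatches = [rid for rid in shared if ai_outputs[rid] != reviewed_baseline[rid]]
    return {
        "checked": len(shared),
        # Outputs with no reviewed answer cannot be judged right or wrong.
        "unvalidated": len(set(ai_outputs) - set(reviewed_baseline)),
        "accuracy": (len(shared) - len(mismatches)) / len(shared),
        "mismatches": mismatches,
    }


# Hypothetical routing decisions produced by an AI pilot.
ai_outputs = {
    "SUB-001": "refer_to_senior",
    "SUB-002": "routine",
    "SUB-003": "routine",
    "SUB-004": "routine",
}

# Hypothetical decisions confirmed by an experienced underwriter.
reviewed_baseline = {
    "SUB-001": "refer_to_senior",
    "SUB-002": "refer_to_senior",
    "SUB-003": "routine",
}

report = validation_report(ai_outputs, reviewed_baseline)
print(report)
```

The number to watch here is `unvalidated` as much as `accuracy`: any output without a reviewed answer is, by definition, guesswork.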

Processes Aren’t Standardized

If your team has 5 different ways to handle the same task, AI will learn from all of them. That’s not the problem.

The problem is: AI learns what’s common, not what’s correct.

If 60% of your team processes endorsements one way and 40% do it differently, the AI will likely adopt the majority pattern. But what if the 40% are actually doing it right? What if the common approach has been propagating errors for years?

This isn’t about AI needing perfect processes to function. Modern AI can handle variability. The issue is that you can’t validate whether AI is learning good patterns or just mimicking the most frequent ones when you don’t have a defined standard.

In insurance, where acquisition history means you’re running multiple instances of the same system with different workflows, this compounds fast. Even in the same office, underwriters will say “Well, I do it a little differently” for tasks their colleague eight feet away also handles.

AI doesn’t need you to fix that first. But you need to know which version is right so you can validate what the AI learns. Otherwise, you’re just codifying inconsistency at machine speed.
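
To make the common-versus-correct point concrete, here is a small Python sketch. The 60/40 split comes from the example above; the workflow labels and the `DEFINED_STANDARD` value are hypothetical, and a simple majority vote stands in for whatever a real model would actually learn.

```python
from collections import Counter

# Hypothetical history of how 10 past endorsements were processed:
# 60% used workflow "A" and 40% used workflow "B".
observed_workflows = ["A"] * 6 + ["B"] * 4

# Assumption for illustration: operations leadership has documented "B" as correct.
DEFINED_STANDARD = "B"


def most_common_workflow(history):
    """Return the workflow seen most often, a stand-in for frequency-driven learning."""
    return Counter(history).most_common(1)[0][0]


def share_following_standard(history, standard):
    """Fraction of historical cases that already follow the defined standard."""
    return sum(1 for workflow in history if workflow == standard) / len(history)


learned = most_common_workflow(observed_workflows)
share = share_following_standard(observed_workflows, DEFINED_STANDARD)

print(f"Learned from frequency: {learned}")
print(f"Matches defined standard: {learned == DEFINED_STANDARD}")
print(f"History following the standard: {share:.0%}")
```

Without `DEFINED_STANDARD`, the last two lines cannot be computed at all, which is the point: the majority pattern is all anyone can see.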

No Measurement Framework

“How will you know if the AI is working?”

“Um… it’ll be faster?”

That’s not a measurement framework. That’s a hope. You need baseline metrics, target outcomes, leading indicators, and failure criteria. If you can’t measure it, you can’t manage it.
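
One way to picture such a framework is as a small scorecard. The Python sketch below is illustrative only: the `PilotMetric` class and its field names are invented for this example, the baselines reuse the endorsement figures mentioned earlier (4 days, 30% miss rate), and the targets and failure limits are made-up numbers.

```python
from dataclasses import dataclass


@dataclass
class PilotMetric:
    """One metric in a pilot scorecard. All field names are illustrative."""
    name: str
    baseline: float       # measured before the pilot starts
    target: float         # what success looks like
    failure_limit: float  # the point at which the pilot is stopped
    lower_is_better: bool = True

    def status(self, observed: float) -> str:
        """Classify an observed value as 'on_target', 'failing', or 'in_progress'."""
        if self.lower_is_better:
            if observed <= self.target:
                return "on_target"
            if observed >= self.failure_limit:
                return "failing"
        else:
            if observed >= self.target:
                return "on_target"
            if observed <= self.failure_limit:
                return "failing"
        return "in_progress"


# Baselines from the endorsement example; targets and limits are hypothetical.
cycle_time = PilotMetric("endorsement_cycle_days", baseline=4.0, target=2.0, failure_limit=4.0)
miss_rate = PilotMetric("compliance_miss_rate", baseline=0.30, target=0.05, failure_limit=0.30)

print(cycle_time.name, cycle_time.status(3.0))
print(miss_rate.name, miss_rate.status(0.04))
```

Here the failure limit is set at the baseline, so a pilot that performs no better than today’s process is flagged as failing rather than quietly kept alive.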

What Separates the 5% That Succeed?

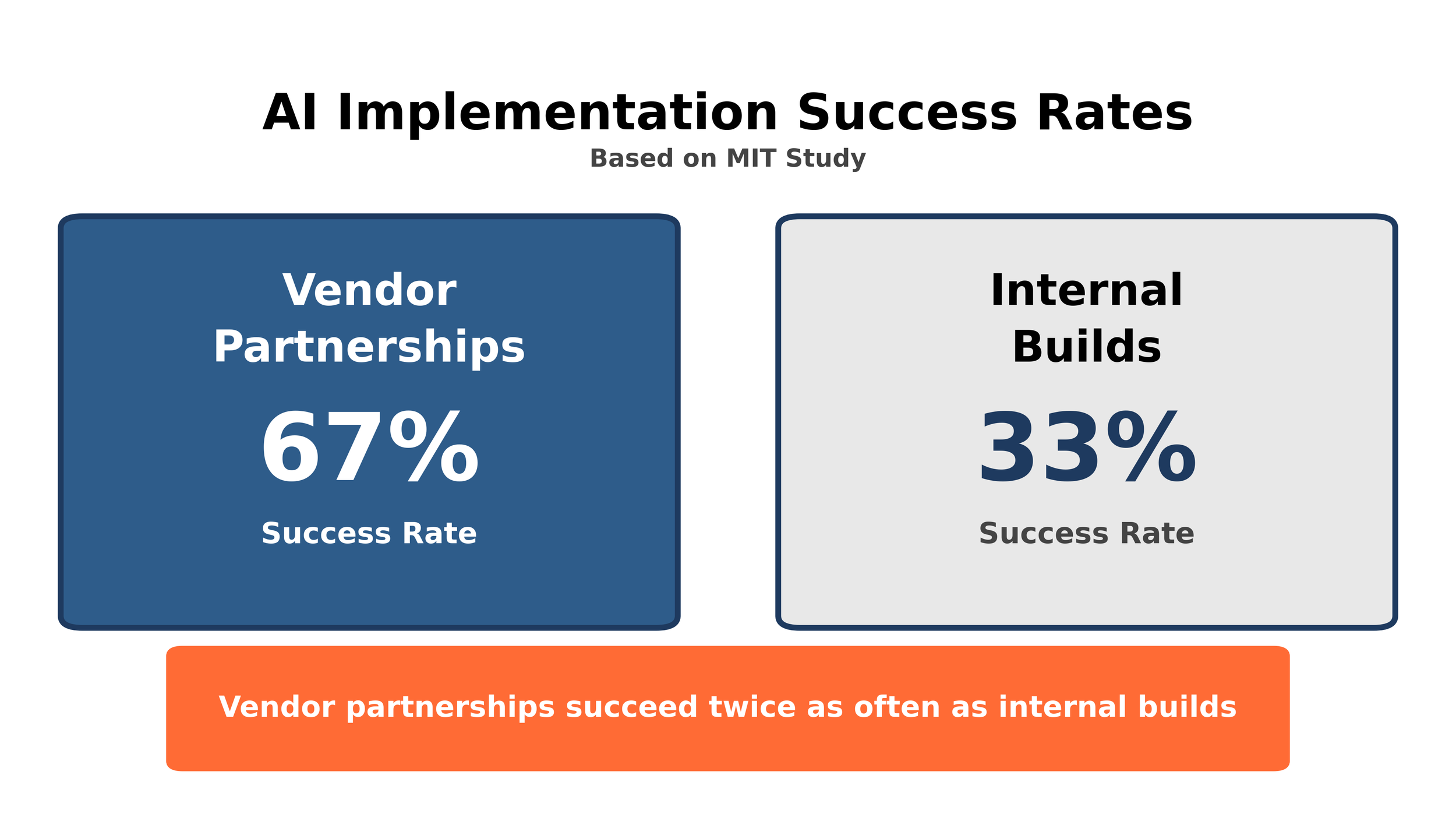

Companies partnering with vendors succeed twice as often as companies building AI in-house. The MIT study found vendor partnerships achieve a 67% success rate, while internal builds succeed only 33% of the time.

And here’s the kicker: back-office operations deliver the highest ROI, yet get the smallest share of AI budgets. Most companies pour resources into sales and marketing AI pilots. The MIT study shows that’s where ROI is lowest. The real returns? Compliance monitoring, reconciliation, process automation, document processing.

This matters for insurance because policy administration, compliance, and claims operations are back-office functions. They’re not sexy. They don’t make headlines. But they’re where AI actually works.

The Specialization Question

The MIT findings aren’t just about AI. They’re about specialization versus generalization across any operational transformation.

Generic offshore BPO firms approach insurance much like they approach every other industry. They follow scripts and decision matrices without understanding the context or nuances of insurance, a behemoth that demands real fluency. They handle endorsement processing the same way they handle finance reconciliation. They optimize for cost per FTE, not for the quality of outcomes.

They’re the equivalent of companies building AI in-house without domain expertise: high adoption rates, low transformation, and the same odds as the internal builds that succeed only 33% of the time.

Specialization is just as mission-critical for AI as it is for outsourcing. In both cases, the ideal partner is purpose-built for insurance workflows and understands policy language, compliance requirements, and coverage implications. They know when a submission requires senior underwriter review rather than routine processing. They optimize for measurable outcomes, not just cost reduction or their own margin padding.

You can hire a generic BPO firm to “process submissions faster,” the same way you can buy generic AI to “improve efficiency.” Both sound good in a demo. Both fail when they encounter actual insurance complexity: state-specific compliance requirements, the difference between admitted and surplus lines, and the nuance of when a routine endorsement becomes coverage-changing.

This is why SparrowHawk Group has been deliberate about our approach to AI and how we position our services to both implement and be complemented by it. We’re not trying to be the cheapest offshore option. We’re not racing to join the mass of “AI-powered” operations advertisements currently flooding the market. We’re focused on being the specialized partner that actually works when tested against insurance complexity.

In complex, regulated industries with domain-specific requirements, specialization isn’t a luxury.

It’s the difference between the 5% that succeed and the 95% that fail.

Where We Stand

We’re implementing AI in 2026. That’s the direction.

We’ve spent 18 months understanding what’s actually breaking in insurance operations: backlogs, capacity constraints, and compliance gaps that keep underwriters up at night. Understanding the pain tells you whether AI is the right prescription or just another expensive placebo.

The MIT study shows vendor partnerships outperform internal builds 2-to-1. But we think there’s an important distinction: this isn’t about selecting a vendor. It’s about finding a partner who enables us to deliver AI-infused outsourcing solutions that actually empower our clients’ operations.

That requires true partnership, not a transactional vendor agreement. Someone whose success is measured by our clients’ outcomes, not software licenses sold. Someone who understands that back-office excellence is what allows MGAs to scale without breaking.

We’re selective because our clients depend on us getting this right. We’ll share details when we have production results, not pilots.

The Uncomfortable Truth

Most AI implementations fail not because the technology is bad, but because companies skip the foundation work. They implement solutions before defining problems. They automate processes before deciding which version is correct. They deploy AI before they know which data matters or how to validate its outputs. They measure vibes instead of outcomes.

The companies succeeding with AI aren’t rushing to be first. They’re the ones willing to do the boring, unglamorous work of getting their operations ready first.

What’s Next

We’ve established: The E&S market is growing faster than operational capacity (Part 1). AI is real technology, but 95% of implementations fail because companies skip the foundation (Part 2).

Which raises the question: What specific operational problems are breaking, and what’s the actual root cause?

In Part 3, we’ll examine the talent misallocation problem: the real reason operations are breaking, and why technology alone won’t fix it.

Spoiler: It’s not that you don’t have enough people. It’s that you’re using expensive people for cheap work.

Sources: